Social media platforms should separate fact from fiction

To separate fact from fiction, social media platforms need to directly moderate the content that their users post.

We live in an age of constant misinformation, where no one really knows what is real or what is fake.

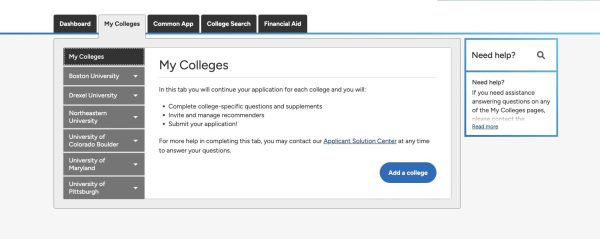

Social media has become a fast-growing source of news and information, with billions of people around the world using apps such as Facebook and Twitter to learn about current events and issues. The benefits of finding news on social media are easy to understand: major news outlets such as CNN and MSNBC post around the clock, and this constant stream of information is easy to keep up with. For members of Gen Z, the ease of access that these services provide is another alluring quality.

However, the downside of these apps is that they can be used to spread fake news and falsehoods. Such falsehoods can spread like wildfire, with former President Donald Trump being perhaps the most prominent example. Over the course of his presidency, he routinely marketed lies to his 88 million followers.

The big question, however, is whether these social media giants are responsible for moderating the content that users read.

The simple answer is yes. If these companies want to influence the spread of news and information, they have to combat fake news through content moderation, and simply flagging it isn’t enough. Taking it down outright, while a controversial option, stops its spread immediately.

Questions about these companies’ inaction on fact-checking have been raised almost daily since the 2016 election, when Facebook failed to crack down on Russian interference through fake news. That failure could have directly influenced the outcome of the election and should rightly be remembered as a stain on Facebook’s reputation. Social media platforms have moderated content more frequently since then, but their efforts have not been broad enough to bring about meaningful change.

However, this is not the first time in American history that the limits of free speech have come into question. Supreme Court Justice Oliver Wendell Holmes addressed a similar issue back in 1919, in the case Schenck v. United States. During World War I, anti-war activists distributed leaflets urging draft-age men to resist the draft; this was prosecuted as a violation of the Espionage Act of 1917.

The Court upheld the convictions of the defendants, Charles Schenck and Elizabeth Baer, for violating the aforementioned act. In his opinion for the Court, Holmes wrote that “The question in every case is whether the words used are used in such circumstances and are of such a nature as to create a clear and present danger that they will bring about the substantive evils that Congress has a right to prevent.”

In essence, if your words are judged to pose a “clear and present danger” to others or to the law, then they aren’t protected under the First Amendment. A clear example is Trump’s recent Twitter ban for tweets that were seen as urging his supporters to march on the Capitol, a march that soon became an armed insurrection against the powers that be. If his words aren’t a clear and present danger to society, then we don’t know what is.

Sacha Baron Cohen, the actor who portrayed Borat in the 2006 film of the same name and in its sequel, spoke out about the issue in a 2019 speech at the Anti-Defamation League’s Never Is Now summit. While some critics have claimed that Twitter’s bans violate users’ First Amendment rights, Cohen disagreed.

“This is not about limiting anyone’s free speech; this is about giving people, including some of the most reprehensible people on Earth, the biggest platform in history to reach a third of the planet,” he said. “Freedom of speech is not freedom of reach.”